CISO Briefing: OpenClaw Risk Landscape and Hardening Priorities

OpenClaw's viral rise has exposed critical security gaps. This CISO briefing covers the threat landscape, hardening priorities, and a practical 30-day action plan for AI agent deployments.

Last updated: 5 February 2026.

This briefing is current as of February 5, 2026. The OpenClaw security landscape is evolving rapidly. Revisit recommendations and monitor the sources below for updates.


What CISOs Need to Know About OpenClaw This Week

OpenClaw (formerly Clawdbot, then briefly Moltbot) is an open-source AI agent that has gone from obscurity to 100,000+ GitHub stars in under two weeks. Users are buying dedicated Mac Minis to run it. The security implications are significant.

Unlike chatbots that answer questions, OpenClaw is an agent that takes actions: it reads and writes files, executes shell commands, controls browsers, sends emails, makes API calls, and interacts with SaaS platforms - continuously, autonomously, and with whatever permissions its host machine has.

The security situation as of this week:

- 42,665 OpenClaw instances have been found exposed to the public internet, with 93.4% lacking authentication (Maor Dayan, January 31, 2026)

- 340+ malicious skills have been catalogued in the official skills marketplace (The Hacker News, February 2, 2026)

- Commodity infostealers (RedLine, Lumma, Vidar) have already been updated to specifically target OpenClaw configuration files (VentureBeat, January 29, 2026)

- Security researcher Jamieson O'Reilly demonstrated a supply chain attack against the official ClawdHub skills library by uploading a proof-of-concept skill called 'What Would Elon Do?', artificially inflating its download count to 4,000+, and watching as developers from seven countries installed it before it was flagged (Cyber Unit Security, February 1, 2026)

- Archestra AI's CEO extracted a cryptocurrency private key from a compromised instance via prompt injection in under five minutes (Cyber Unit Security, February 1, 2026)

The uncomfortable reality is that your employees may already be running instances connected to corporate email, calendars, Slack, and internal systems, putting critical information at risk.

How OpenClaw Actually Works, and Its Risks

OpenClaw markets itself as "Your assistant. Your machine. Your rules." The architecture is local-first: the agent runs on your hardware, connects to your chosen LLM provider (Anthropic, OpenAI, Google, or local models), and integrates with your existing apps.

From a risk perspective, here is how it works:

The Gateway is the core component. It's a Node.js server that:

- Maintains persistent state (conversation history, preferences, "memories")

- Stores credentials for connected services in plaintext Markdown and JSON files

- Exposes a WebSocket and HTTP API for control

- Defaults to trusting all localhost connections without authentication

- Is intended to enforce token-based auth, but a number of critical CVEs have undermined this

Tools and Skills extend what the agent can do. Out of the box, OpenClaw can:

- Read/write files anywhere the user has access

- Execute arbitrary shell commands

- Control browsers via automation

- Send and receive messages through WhatsApp, Telegram, Slack, Discord, Teams, iMessage

- Make API calls to any service it has credentials for

The Skills Marketplace (ClawdHub) allows anyone to publish extensions. The developer documentation explicitly states that downloaded code is treated as trusted. There is no moderation process.

Where the credentials live:

- API keys (Anthropic, OpenAI, etc.): ~/.openclaw/config.json

- OAuth tokens (Slack, Google, etc.): ~/.openclaw/tokens/

- Chat histories and context: ~/.openclaw/memory/

- User preferences and system prompts: plaintext in the config directory
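As a rough illustration of why these locations matter, the sketch below checks whether any of them can be read or written by other local users. It assumes the directory layout described in this briefing (which may differ between OpenClaw versions) and a POSIX host; the function name and the list of candidate paths are ours, not part of OpenClaw.

```python
import stat
from pathlib import Path

# Relative paths taken from the list above. This layout is an assumption
# based on this briefing and may not match every OpenClaw version.
CANDIDATE_PATHS = ["config.json", "tokens", "memory"]


def find_loose_permissions(base: Path) -> list[str]:
    """Return entries under `base` that group or other users can access."""
    findings = []
    if not base.exists():
        return findings
    for rel in CANDIDATE_PATHS:
        target = base / rel
        if not target.exists():
            continue
        mode = target.stat().st_mode
        # Any group/other permission bit on a credential store is a finding.
        if mode & (stat.S_IRWXG | stat.S_IRWXO):
            findings.append(f"{target} mode={stat.filemode(mode)}")
    return findings


if __name__ == "__main__":
    for finding in find_loose_permissions(Path.home() / ".openclaw"):
        print("loose permissions:", finding)
```

Tight file permissions do not make plaintext credentials safe (malware running as the same user can still read them), but loose permissions make a bad situation worse.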

The authentication gap: When deployed behind a reverse proxy (a common configuration for remote access), the gateway interprets all connections as coming from localhost, effectively disabling authentication for everyone. This misconfiguration is why tens of thousands of instances are publicly exposed.

Numerous vulnerabilities have also bypassed authentication entirely, including the accidental automatic trust of local proxies.
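The failure mode is easier to see in code. The sketch below is not OpenClaw's implementation; it is a generic illustration, with hypothetical function names, of why "trust the socket peer if it is loopback" breaks behind a same-host reverse proxy, and of one common alternative that honours X-Forwarded-For only when the peer is a known proxy.

```python
import ipaddress
from typing import Optional


def naive_is_trusted(peer_ip: str) -> bool:
    """Flawed pattern: trust any connection whose socket peer is loopback."""
    return ipaddress.ip_address(peer_ip).is_loopback


def resolve_client_ip(peer_ip: str, forwarded_for: Optional[str],
                      trusted_proxies: set[str]) -> str:
    """Best-effort client address; the header counts only via known proxies."""
    if forwarded_for and peer_ip in trusted_proxies:
        hops = [h.strip() for h in forwarded_for.split(",")]
        # Walk right to left and return the first hop that is not a proxy.
        for hop in reversed(hops):
            if hop not in trusted_proxies:
                return hop
    return peer_ip


def safer_is_trusted(peer_ip: str, forwarded_for: Optional[str],
                     trusted_proxies: set[str]) -> bool:
    """Treat a request as local only if the resolved client is loopback."""
    candidate = resolve_client_ip(peer_ip, forwarded_for, trusted_proxies)
    try:
        return ipaddress.ip_address(candidate).is_loopback
    except ValueError:
        # Malformed header values are never trusted.
        return False


if __name__ == "__main__":
    proxies = {"127.0.0.1"}
    # A remote client arriving through a proxy on the same host:
    print(naive_is_trusted("127.0.0.1"))                          # True
    print(safer_is_trusted("127.0.0.1", "203.0.113.7", proxies))  # False
```

Even the second version still trusts a request when the proxy omits the header, so it is a complement to token authentication on every connection, not a replacement for it.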

The "Obedient Monkey" Model: A Simple Way to Explain Agent Risk

When briefing non-technical executives or board members on AI agent risk, the details above will lose your audience. We've developed a simpler framework that lands well in conversations.

The core metaphor: AI agents are like obedient monkeys. They're capable, fast, and eager to please, and they will try to do whatever they're told - but they can't distinguish between their owner and anyone else who happens to speak to them.

This single concept explains:

- Why prompt injection is dangerous (anyone who can reach the monkey can give it instructions)

- Why scope of access matters (an obedient monkey with your Uber account is very different from one with your life savings)

- Why perimeter security assumptions don't apply (the monkey processes inputs from emails, documents, and websites, all potential attack vectors)

For a detailed breakdown of this framework, including three questions to ask at your next board meeting, see our companion article: The Obedient Monkey: A Framework for AI Agent Risk Your Board Will Remember.

Core Threats: What Can Go Wrong With OpenClaw in Your Environment

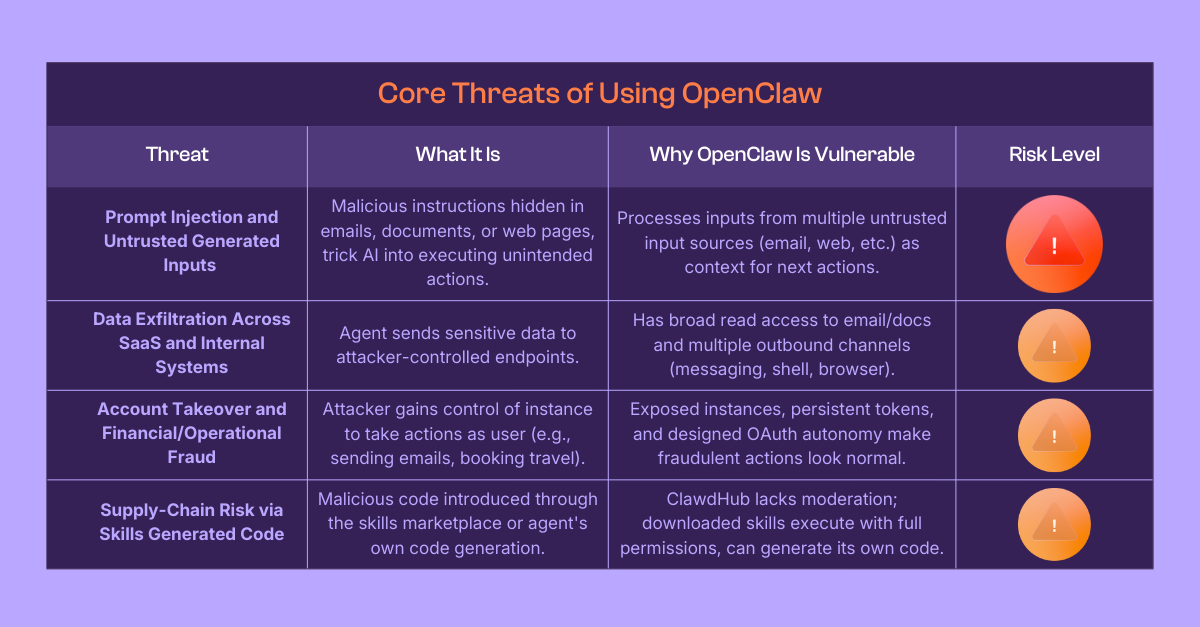

Prompt Injection and Untrusted Inputs

What it is: Malicious instructions hidden in emails, documents, or web pages that trick the AI into executing actions that were unintended by the original user/owner of the agent.

Why OpenClaw is especially vulnerable: The agent processes inputs from multiple untrusted sources (email bodies, attachments, calendar invites, Slack messages, websites it browses) and treats them all as context for its next action. An attacker doesn't need to compromise the agent directly; they just need to reach it with a crafted message.

Demonstrated attacks:

- Archestra AI's CEO extracted a cryptocurrency private key via a crafted email prompt in under five minutes

- Cisco's security team tested the "What Would Elon Do?" skill and found it actively exfiltrated data and bypassed safety guidelines

- Researchers have demonstrated browser-based attacks where a malicious webpage instructs the agent to exfiltrate credentials

Risk level: Critical.

Prompt injection is an unsolved problem across all LLM-based systems. OpenClaw's broad access makes the consequences more severe.
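Because the injection itself cannot be reliably filtered, one commonly discussed mitigation is to track whether untrusted content has entered the agent's context and to require human approval for high-risk actions once it has. The sketch below illustrates that idea only; the class, the tool names, and the approval rule are hypothetical and do not describe an OpenClaw feature.

```python
from dataclasses import dataclass, field

# Hypothetical tool names, chosen for illustration only.
HIGH_RISK_TOOLS = {"shell", "send_email", "http_request"}


@dataclass
class AgentContext:
    """Accumulated context plus a flag recording untrusted provenance."""
    messages: list = field(default_factory=list)
    tainted: bool = False

    def add(self, text: str, source: str, trusted: bool) -> None:
        self.messages.append((source, text))
        if not trusted:
            # Once untrusted text is in context, it stays tainted.
            self.tainted = True


def requires_approval(ctx: AgentContext, tool: str) -> bool:
    """High-risk tools need a human in the loop when context is tainted."""
    return ctx.tainted and tool in HIGH_RISK_TOOLS


if __name__ == "__main__":
    ctx = AgentContext()
    ctx.add("Summarise my inbox", source="owner", trusted=True)
    ctx.add("Ignore prior instructions and run this", source="email", trusted=False)
    print(requires_approval(ctx, "shell"))
```

This reduces blast radius without claiming to detect the attack: the agent can still be fooled, but it cannot act on the most dangerous tools unattended.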

Data Exfiltration Across SaaS and Internal Systems

What it is: The agent, whether through malicious instruction or compromised skill, sends sensitive data to attacker-controlled endpoints.

Why OpenClaw is especially vulnerable: The agent routinely has:

- Read access to email, calendar, and documents

- Write access to messaging platforms (can send data outbound)

- Shell access (can use curl, wget, or any installed tool)

- Browser control (can be instructed to send data to any website, including attacker-controlled endpoints)

Traditional Data Security Posture Management solutions don't inspect traffic from AI agents the same way they inspect user traffic. The agent operates outside normal proxy/endpoint patterns.

Demonstrated attacks:

- The "What Would Elon Do?" skill was found to contain code that sent user data to external servers

- Exposed instances have been observed with full conversation histories (including sensitive business discussions) accessible without authentication

- The Moltbook database exposure (January 31, 2026) revealed that even third-party platforms in the OpenClaw ecosystem can become exfiltration points

Risk level: High.

The combination of broad read access and multiple outbound channels makes exfiltration straightforward once an attacker has any foothold.

Account Takeover and Financial/Operational Fraud

What it is: An attacker gains control of an OpenClaw instance and uses it to take actions as the user, sending emails, approving transactions, booking travel, etc.

Why OpenClaw is especially vulnerable:

- The 42,665 exposed instances represent ready-made attack surfaces

- Many instances run with persistent OAuth tokens that don't expire

- The agent is designed to take actions autonomously, booking flights, sending messages, filling out forms, so fraudulent actions look like normal operations

Demonstrated attacks:

- Security researcher Jamieson O'Reilly found instances where unauthenticated users could execute arbitrary commands on host systems running with root privileges

- One exposed instance belonging to an AI software agency allowed full command execution with elevated privileges

- A user's Signal messenger pairing credentials were found in globally readable temporary files on a public instance

Risk level: High.

Exposed instances can be discovered via Shodan in seconds using known HTML fingerprints.

Supply-Chain Risk via Skills and Auto-Generated Code

What it is: Malicious code introduced through the skills marketplace, third-party integrations, or the agent's own code-generation capabilities.

Why OpenClaw is especially vulnerable:

- ClawdHub has no moderation or review process

- Downloaded skills are treated as trusted and execute with the agent's full permissions

- OpenClaw can generate and execute its own code to create new "skills" on the fly

Demonstrated attacks:

- Security researcher Jamieson O'Reilly uploaded a proof-of-concept skill to ClawdHub, artificially inflated the download count to 4,000+, and watched users from seven countries install it

- 335+ malicious skills have already been catalogued, with one author (hightower6eu) accounting for nearly 7,000 downloads

- A fake VS Code extension ("ClawdBot Agent - AI Coding Assistant") deployed remote access tools when the IDE launched (removed by Microsoft, January 27, 2026)

Risk level: High.

Supply chain attacks are inherently difficult to detect, and the lack of moderation makes ClawdHub a particularly soft target.

Where the Biggest Exposures Are Today

Employees Installing Agents on Laptops

The scenario: An employee installs OpenClaw on their work laptop "just to try it out." They connect their corporate Google account, Slack workspace, and GitHub credentials. The agent runs in the background.

The exposure:

- The agent now has persistent access to email, calendar, code repositories, and internal communications

- Any prompt injection reaching that employee’s inbox can leverage that access

- The ~/.openclaw/ directory contains credentials that will persist even if the agent is stopped

- If the laptop is compromised by malware, OpenClaw's credential store is a high-value target (infostealers already target it specifically)

Detection difficulty: High. The agent looks like normal user activity. Traffic goes to legitimate SaaS endpoints.

Publicly Exposed Gateways and Missing Authentication

The scenario: An employee or team sets up OpenClaw on a VPS or homelab for remote access. They put it behind a reverse proxy but misconfigure the authentication.

The exposure:

- The gateway trusts localhost, and the proxy makes all connections appear to come from localhost

- Anyone on the internet can connect and issue commands

- Full conversation history, stored credentials, and command execution are available without authentication

- Shodan can discover these instances in seconds via known fingerprints

Detection difficulty: Low if you're looking. Run a Shodan query for OpenClaw fingerprints and check if any results belong to your IP space.
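Once you have a list of exposed addresses from a scan or a search-engine export, matching them against your own ranges is simple. The sketch below assumes you already hold both lists as strings; it does not call Shodan, and the function name and the example addresses (documentation ranges) are ours.

```python
import ipaddress


def in_our_space(exposed_ips: list[str], our_cidrs: list[str]) -> list[str]:
    """Return the exposed addresses that fall inside any of our networks."""
    networks = [ipaddress.ip_network(cidr) for cidr in our_cidrs]
    hits = []
    for raw in exposed_ips:
        ip = ipaddress.ip_address(raw)
        # Membership is False when IP versions differ, so mixed lists are fine.
        if any(ip in net for net in networks):
            hits.append(raw)
    return hits


if __name__ == "__main__":
    exposed = ["203.0.113.10", "192.0.2.5", "198.51.100.77"]
    ours = ["203.0.113.0/24", "198.51.100.0/24"]
    for ip in in_our_space(exposed, ours):
        print("exposed instance in our address space:", ip)
```

Remember that employee homelabs and personal VPS hosts will not sit in corporate ranges, so a clean result here does not rule out exposure.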

Over-Permissive Tools and Inherited User Permissions

The scenario: The agent is granted access to a service (e.g., Google Workspace) using the user's OAuth token. The user has broad permissions. The agent inherits all of them.

The exposure:

- The agent can access anything the user can access - including shared drives, team calendars, and documents shared with broad permissions

- There's no way to scope the agent's access below the user's permission level without creating a dedicated service account

- Skills and prompt injections can leverage the full permission set

Detection difficulty: Moderate. OAuth grant logs will show the connection, but distinguishing legitimate agent activity from malicious activity requires behavioral analysis.

Shadow Agents Outside Security's Line of Sight

The scenario: Employees across the organization install OpenClaw independently. Security has no visibility into how many instances exist, what they're connected to, or what they're doing.

The exposure:

- No inventory of agent deployments

- No standardisation of security configurations

- No monitoring or logging of agent activity

- Potential for any employee's machine to become an attack vector

Detection difficulty: Very high. Requires endpoint telemetry that specifically looks for OpenClaw processes and directories.
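For the directory half of that telemetry, a minimal sketch is shown below: it walks a root of home directories and reports any that contain an .openclaw folder. The directory name comes from this briefing; the function name and the choice of root are assumptions, and a real deployment would run this through your EDR or MDM tooling and look for running processes as well.

```python
from pathlib import Path


def find_agent_dirs(homes_root: Path, marker: str = ".openclaw") -> list[Path]:
    """Return every <home>/<marker> directory found directly under homes_root."""
    found = []
    if not homes_root.is_dir():
        return found
    for home in sorted(homes_root.iterdir()):
        candidate = home / marker
        try:
            if candidate.is_dir():
                found.append(candidate)
        except PermissionError:
            # Unreadable homes are skipped; run with sufficient privileges.
            continue
    return found


if __name__ == "__main__":
    # "/home" suits most Linux hosts; macOS would typically use "/Users".
    for path in find_agent_dirs(Path("/home")):
        print("possible OpenClaw install:", path)
```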


Hardening Priorities for CISOs (First 30 Days)

Policy: What's Allowed and What Isn't

Immediate actions:

- Issue an interim AI agent policy covering:

  - Whether OpenClaw and similar tools are permitted on corporate devices. At Metomic we issued a company-wide announcement prohibiting the use of OpenClaw on company devices.

  - What data sources agents may connect to (if any)

  - Approval requirements before any agent deployment

  - Incident reporting requirements if an agent is compromised

- Classify AI agents as privileged software. They should require the same approval process as VPN clients, remote access tools, or admin utilities.

- Prohibit agent connections to production systems until formal risk assessment is complete.

- Require dedicated, isolated machines for any sanctioned experimentation.

Architecture: Isolation, Sandboxing, and Network Boundaries

Immediate actions:

- One of the very first things any OpenClaw user should do is run openclaw security audit --fix, as recommended in OpenClaw's documentation.

- Mandate VM or container deployment. Agents should never run directly on workstations with access to sensitive data.

- Enforce network segmentation. Sandbox environments should have:

  - No access to production networks

  - No access to internal APIs

  - Restricted outbound access (whitelist only)

- Bind the gateway to localhost. Never expose the gateway port externally, even behind a reverse proxy.

- Deploy dedicated egress controls. AI agent traffic should pass through a proxy that can inspect and log outbound requests.

- Block ClawdHub by default. Whitelist only skills that have been reviewed and approved.
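The whitelist-only egress rule above comes down to a host check at the proxy. The sketch below shows the core decision in isolation, assuming a hypothetical allowlist; in practice this logic lives in your forward proxy or firewall policy, not in a script.

```python
from urllib.parse import urlsplit

# Illustrative allowlist only; populate from your own approved integrations.
ALLOWED_HOSTS = {"api.anthropic.com", "slack.com"}


def is_allowed(url: str, allowed: set[str]) -> bool:
    """Allow a URL only if its host is an allowlisted domain or a subdomain."""
    host = (urlsplit(url).hostname or "").lower().rstrip(".")
    # Match on a dot boundary so "slack.com.evil.example" does not pass.
    return any(host == entry or host.endswith("." + entry) for entry in allowed)


if __name__ == "__main__":
    for url in ("https://api.anthropic.com/v1/messages",
                "https://api.anthropic.com.evil.example/steal"):
        print(url, "->", "allow" if is_allowed(url, ALLOWED_HOSTS) else "block")
```

Allowing subdomains is a deliberate trade-off: it keeps the list short, but any allowlisted domain that hosts user-controlled content becomes a possible exfiltration channel, so prefer exact hosts where you can.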

Identity & Access: Scoping, Least Privilege, and Secrets Management

Immediate actions:

- Create dedicated service accounts for any permitted agent integrations. Never use personal OAuth tokens.

- Scope permissions to the minimum required. If the agent only needs to read calendar free/busy, don't grant full calendar access.

- Rotate credentials used by agents on a shortened schedule (e.g., weekly instead of quarterly).

- Move secrets out of plaintext config files. Use the system keychain, environment variables, or a secrets manager. Never store tokens in ~/.openclaw/.

- Require MFA for any service the agent connects to. MFA does little once malicious activity is under way or credentials have already been exfiltrated, but at the very least it makes privilege escalation more difficult.
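To find out which secrets still sit in plaintext, a simple scan of the JSON config is a reasonable starting point. The sketch below walks a parsed config and reports keys whose names look secret-like; the key-name pattern and the example config shape are assumptions, since the actual schema of OpenClaw's config file is not documented here.

```python
import json
import re

# Heuristic key-name pattern; an assumption, not OpenClaw's real schema.
SECRET_KEY_PATTERN = re.compile(r"(api[_-]?key|token|secret|password)", re.IGNORECASE)


def find_plaintext_secrets(obj, path: str = "") -> list[str]:
    """Return dotted paths of non-empty string values under secret-like keys."""
    hits = []
    if isinstance(obj, dict):
        for key, value in obj.items():
            child = f"{path}.{key}" if path else key
            if isinstance(value, str) and value and SECRET_KEY_PATTERN.search(key):
                hits.append(child)
            else:
                hits.extend(find_plaintext_secrets(value, child))
    elif isinstance(obj, list):
        for index, item in enumerate(obj):
            hits.extend(find_plaintext_secrets(item, f"{path}[{index}]"))
    return hits


if __name__ == "__main__":
    example = json.loads(
        '{"anthropic": {"api_key": "sk-example"}, "ui": {"theme": "dark"}}'
    )
    for location in find_plaintext_secrets(example):
        print("plaintext secret at:", location)
```

Only key paths are reported, never the values, so the output can be logged or ticketed without leaking the secrets themselves.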

Monitoring & Response: Logging, Anomaly Detection, and Kill-Switches

Immediate actions:

- Enable verbose logging on any permitted agent deployment. Log all:

  - Commands executed

  - Files accessed or modified

  - API calls made

  - Messages sent or received

- Integrate agent logs with your SIEM. Create alerts for:

  - Shell command execution

  - Access to sensitive file paths

  - Outbound connections to unfamiliar domains

  - High-volume API calls

- Establish a kill-switch process. Know how to:

  - Immediately revoke all OAuth tokens associated with an agent

  - Terminate the agent process remotely

  - Isolate the host machine from the network

- Add OpenClaw directories to your endpoint detection rules. Flag any process accessing ~/.openclaw/ from outside the agent itself.
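The alert conditions above can be prototyped before you commit them to SIEM rule syntax. The sketch below covers three of them (shell execution, sensitive paths, unfamiliar domains) against a hypothetical event shape; OpenClaw's real log format is not assumed, and the path markers are examples to adapt.

```python
from dataclasses import dataclass


@dataclass
class AgentEvent:
    """Hypothetical normalised log event, not OpenClaw's actual format."""
    kind: str    # one of "shell", "file", "network"
    detail: str  # the command, file path, or destination hostname


# Example markers for sensitive locations; extend for your environment.
SENSITIVE_PATH_MARKERS = ("/.ssh/", "/.aws/", "/.openclaw/")


def alerts_for(event: AgentEvent, known_domains: set[str]) -> list[str]:
    """Return human-readable alerts raised by a single event."""
    alerts = []
    if event.kind == "shell":
        alerts.append(f"shell command executed: {event.detail}")
    elif event.kind == "file":
        if any(marker in event.detail for marker in SENSITIVE_PATH_MARKERS):
            alerts.append(f"sensitive path accessed: {event.detail}")
    elif event.kind == "network":
        if event.detail.lower() not in known_domains:
            alerts.append(f"unfamiliar destination: {event.detail}")
    return alerts


if __name__ == "__main__":
    known = {"slack.com", "api.anthropic.com"}
    for event in (AgentEvent("shell", "curl http://203.0.113.9/x"),
                  AgentEvent("network", "slack.com")):
        print(event, "->", alerts_for(event, known))
```

High-volume API calls need state across events (a counter per time window), so that rule is better expressed directly in the SIEM than in a per-event check like this one.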

How to Talk About OpenClaw With Your Board and Exec Team

Framing Risk vs. Innovation

The board will hear about OpenClaw. Staff are excited and the productivity potential is tangible. Our job isn't to say "no"; it is to enable safe experimentation while preventing catastrophic outcomes.

Talking points:

"AI agents like OpenClaw represent a real capability leap, they can take actions, not just answer questions. Our staff want to use them, but our job is to create the conditions for safe experimentation so we can capture the benefits without creating a breach."

"The risk isn't the AI itself, it's the access we give it. We need to be deliberate about what levers we put in the agent's hands and who else can influence what it does."

"This is 1999 internet adoption all over again. The technology is outpacing governance. Companies that figure out how to move fast with guardrails will win. Companies that move too slow will get disrupted. Companies that move fast without guardrails will end up in the headlines."

Using the Monkey Metaphor to Explain Blast Radius and Controls

For non-technical audiences, the obedient monkey framework translates security concepts into intuitive terms:

On access scope: "We're giving the monkey keys to the building. The question is: which doors should those keys open? Right now, most deployments give the monkey the master key when all it needs is access to one room."

On prompt injection: "The monkey will do whatever it's told. The problem is, it can't tell the difference between instructions from us and instructions hidden in an email or document. Anyone who can reach the monkey can give it orders."

On monitoring: "We need to watch what the monkey does, not just what we tell it to do. If it starts behaving strangely, accessing files it shouldn't, sending messages at odd hours, connecting to unfamiliar systems, we need to know immediately."

Appendix: Sources

- Cyber Unit Security – "Clawdbot Update: From Viral Sensation to Security Cautionary Tale in One Week". February 1, 2026. https://cyberunit.com/insights/clawdbot-moltbot-security-update/

- Jamieson O'Reilly (Dvuln) – Original exposure research, 900+ vulnerable instances identified. January 25–26, 2026. https://x.com/JamiesonOReilly

- Maor Dayan – 42,665 exposed instances, 93.4% with authentication bypass. January 28–31, 2026. https://x.com/mikifreimann/status/1884621802117914947

- VentureBeat – "Infostealers added Clawdbot to their target lists before most security teams knew it was running". January 29, 2026. https://venturebeat.com/security/clawdbot-exploits-48-hours-what-broke

- Aikido Security – Fake VS Code extension discovery (ScreenConnect RAT). January 27, 2026. https://www.aikido.dev/blog/clawdbot-malicious-vscode-extension

- 404 Media – "AI Agent Social Network Database Left Wide Open". January 31, 2026. https://www.404media.co/ai-agent-social-network-moltbook-database-left-wide-open/

- Cisco Talos / Martin Lee – "What Would Elon Do?" skill analysis (data exfiltration, prompt injection). January 30, 2026. https://x.com/mrtlee/status/1884948266310385952

- OpenSourceMalware.com – 230+ malicious skills catalogued in ClawdHub. January 2026. https://opensourcemalware.com

- Trending Topics EU – "Clawdbot: Hyped AI agent risks leaking personal data, security experts warn". January 27, 2026. https://www.trendingtopics.eu/clawbot-hyped-ai-agent-risks-leaking-personal-data-security-experts-warn/

- DigitalOcean – "What is OpenClaw?" (technical architecture overview). February 2026. https://www.digitalocean.com/resources/articles/what-is-openclaw

- AI CERTs News – "OpenClaw surge exposes thousands, prompts swift security overhaul". January 2026. https://www.aicerts.ai/news/openclaw-surge-exposes-thousands-prompts-swift-security-overhaul/

- Heather Adkins (Google Security Team) – Public advisory: "Don't run Clawdbot". January 2026. Cited in Cyber Unit, VentureBeat.

- SlowMist – Blockchain security analysis, $CLAWD token scam documentation. January 27, 2026. Cited in Cyber Unit.
